How Does ULogViewer Read and Parse Logs

In this article:

- Parts Included in Reading and Parsing Logs

- Log Data Sources

- Formatting Raw Logs before Parsing

- Parsing Information Defined in Log Profile

- Video Tutorial

- See Also

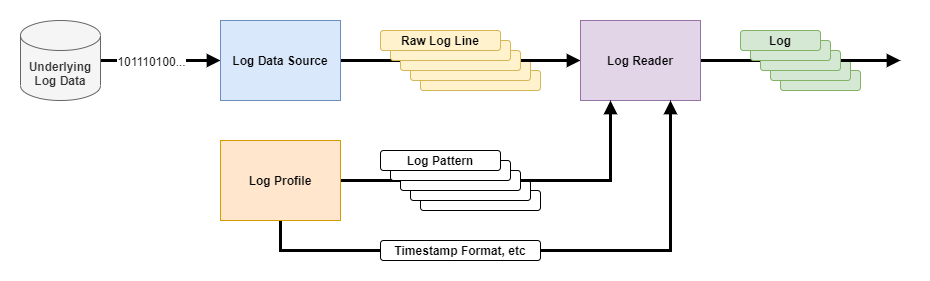

Parts Included in Reading and Parsing Logs

Underlying Log Data

This is the original source that provides raw log data from outside ULogViewer. It may be a file, a database, or the output of another program.

Log Data Source

This is the first part of ULogViewer, which reads raw log data into ULogViewer. There are various types of Log Data Source in ULogViewer to handle different types of Underlying Log Data.

Log Data Source reads raw log data and transforms it into multiple Raw Log Lines, each represented as a string regardless of the type of the raw log data. It is important to know which Raw Log Lines a specific Log Data Source generates so that you can define how to parse them into actual logs.

Log Profile

A Log Profile contains a lot of information, part of which is related to log parsing: for example, log patterns, timestamp format, etc.

Log Reader

Takes the Raw Log Lines generated by Log Data Source, then parses and converts them into actual logs according to the information provided by Log Profile.

Log Data Sources

File

File Log Data Source is the simplest type of Log Data Source. It reads strings line by line directly from a file. You can specify which encoding is used to read strings from the file.

Standard Output (stdout)

Launches a specific program and reads strings line by line in UTF-8 from its standard output/error (stdout/stderr). You need to define the Command first in order to launch the program and pass parameters, for example ipconfig /all (on Windows). Furthermore, you can define extra commands to be executed BEFORE or AFTER reading raw log data.

Azure CLI (Pro)

Launches Azure CLI and reads strings line by line in UTF-8 from its standard output/error (stdout/stderr). You need to define the Command first in order to launch Azure CLI with parameters. ULogViewer will try to log in to Azure before reading data from Azure CLI.

HTTP/HTTPS

Sends an HTTP/HTTPS request to a specific URI and reads the response line by line. You can provide a User Name and Password if login is needed to send the request.

TCP Server

Creates a TCP server bound to a specific IP/Port, then reads text content from a client once it connects to the bound IP/Port.

UDP Server

Creates a UDP server bound to a specific IP/Port and reads text once data is sent to the bound IP/Port.

MySQL/SQLite/SQL Server Database

Performs a specific Query String (SQL command) and gets the result rows back. Because a database result is not a set of strings, the MySQL/SQLite/SQL Server Database Log Data Source transforms the result into strings COLUMN by COLUMN and ROW by ROW. For example, if the result is

| Column1 | Column2 | Column3 |
|---|---|---|
| A | B | C |
| D | E | F |

then the values are transformed in the following order:

- A

- B

- C

- D

- E

- F

Each value is transformed into a string according to its type:

- If the type is String, it will transform data into 1 line:

  <ColumnName>Value encoded as XML string</ColumnName>

  Before v2.0, it will transform data into 3 or more lines:

  <ColumnName>

  Value encoded as XML string

  </ColumnName>

- If the type is Blob, it will encode data to a Base64 string and transform it into 1 line:

  <ColumnName>Base64 encoded data</ColumnName>

- If the type is Timestamp, it will transform into 1 line:

  <ColumnName>Timestamp with format yyyy/MM/dd HH:mm:ss.ffffff</ColumnName>

- For other types, it will transform into 1 line:

  <ColumnName>Value</ColumnName>

For example, if the result is

| ID | Timestamp | Message |
|---|---|---|
| 1 | 1/1 10:08 | First message |
| 2 | 1/1 10:09 | <Second message> |

then the following Raw Log Lines will be generated:

<ID>1</ID>

<Timestamp>2021/1/1 10:08:00.000000</Timestamp>

<Message>First message</Message>

<ID>2</ID>

<Timestamp>2021/1/1 10:09:00.000000</Timestamp>

<Message>&lt;Second message&gt;</Message>
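The column-by-column, row-by-row transformation described above can be sketched in Python. This is only an illustration of the documented rules, not ULogViewer's actual implementation; the function names `value_to_text` and `rows_to_raw_log_lines` are hypothetical, and the mapping of database types to Python types (`str`, `bytes`, `datetime`) is an assumption.

```python
import base64
from datetime import datetime
from xml.sax.saxutils import escape


def value_to_text(value):
    """Convert one column value to text, following the per-type rules above."""
    if isinstance(value, str):
        return escape(value)  # String: encoded as XML string
    if isinstance(value, (bytes, bytearray)):
        return base64.b64encode(value).decode("ascii")  # Blob: Base64
    if isinstance(value, datetime):
        return value.strftime("%Y/%m/%d %H:%M:%S.%f")  # Timestamp
    return str(value)  # other types: plain value


def rows_to_raw_log_lines(column_names, rows):
    """Emit one raw log line per column, row by row."""
    lines = []
    for row in rows:
        for name, value in zip(column_names, row):
            lines.append(f"<{name}>{value_to_text(value)}</{name}>")
    return lines


if __name__ == "__main__":
    rows = [
        (1, datetime(2021, 1, 1, 10, 8), "First message"),
        (2, datetime(2021, 1, 1, 10, 9), "<Second message>"),
    ]
    for line in rows_to_raw_log_lines(["ID", "Timestamp", "Message"], rows):
        print(line)
```

Knowing that every value arrives on its own line, wrapped in a tag named after its column, is what lets you write one Log Pattern per column later on.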

Windows Event Logs (Windows)

Gets structured Windows Event Logs data from the system and transforms each Event Log into strings in the following format:

<Timestamp>Timestamp of log in yyyy/MM/dd HH:mm:ss format</Timestamp>

<EventId>Event ID</EventId>

<Level>Level character</Level>

<Source>Source of log encoded as XML string</Source>

<Message>

Message of log encoded as XML string

</Message>

For Level character, please refer to the following table:

| Character | Level |
|---|---|
| e | Error |
| f | Fatal |
| i | Info |
| s | Success |
| w | Warn |

Windows Event Log File (v3.0+)

Gets structured Windows Event Logs data from a Windows XML Event Log (*.evtx) file and transforms each Event Log into strings in the following format:

<Timestamp>Timestamp of log in yyyy/MM/dd HH:mm:ss format</Timestamp>

<Computer>Computer name as XML string</Computer>

<UserName>User name as XML string</UserName>

<Category>Category of event log as XML string</Category>

<ProcessId>Identifier of process (PID)</ProcessId>

<ThreadId>Identifier of thread (TID)</ThreadId>

<EventId>Event ID</EventId>

<Level>Level character</Level>

<SourceName>Source of log encoded as XML string</SourceName>

<Message>

Message of log encoded as XML string

</Message>

For Level character, please refer to the following table:

| Character | Level |
|---|---|
| e | Error |
| i | Info |
| v | Verbose |
| w | Warn |

ULogViewer realtime log

Reads strings line by line from the internal log buffer of ULogViewer. You can use it to debug or analyze the behavior of ULogViewer or of a user-defined script.

Formatting Raw Logs before Parsing

Sometimes raw logs are difficult to parse because of their format, for example unexpected line breaks or an undefined ordering of properties. Therefore, ULogViewer supports formatting raw logs while reading them from the log data source, which makes it easier to define Log Patterns for the raw log lines later.

Formatting JSON Data

When this option is enabled, the raw logs are treated as JSON data and formatted into a specific layout. For example, if the raw logs are:

{ "timestamp": "2024/1/1 10:08", "level": "INFO", "message": "log message 1" }

{ "timestamp": "2024/1/1 10:09", "level": "WARN", "message": "log message 2", "extras": [ 1, 2, 3 ] }

Then they will be formatted into:

{

"timestamp": "2024/1/1 10:08",

"level": "INFO",

"message": "log message 1"

}

{

"timestamp": "2024/1/1 10:09",

"level": "WARN",

"message": "log message 2",

"extras":

[

1,

2,

3

]

}

The option is available in File, Standard Output (stdout) and HTTP/HTTPS log data sources.
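The effect of JSON formatting, one property per raw log line, can be approximated with Python's standard `json` module. This is a rough sketch under the assumption that each raw log is one JSON object on a single line, as in the example above; `format_json_logs` is a hypothetical name, and the exact layout differs from ULogViewer's (for instance, `json.dumps` keeps `[` on the same line as `"extras":`).

```python
import json


def format_json_logs(raw_lines):
    """Re-emit each single-line JSON object as several lines, one property per line."""
    formatted = []
    for raw in raw_lines:
        raw = raw.strip()
        if not raw:
            continue  # ignore blank lines between logs
        obj = json.loads(raw)
        formatted.extend(json.dumps(obj, indent=4).splitlines())
    return formatted


if __name__ == "__main__":
    raw = ['{ "timestamp": "2024/1/1 10:08", "level": "INFO", "message": "log message 1" }']
    print("\n".join(format_json_logs(raw)))
```

Once every property sits on its own line in a predictable order, each line can be matched by its own Log Pattern.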

Formatting CLEF Data (v4.1+, Pro)

When this option is enabled, the raw logs are treated as CLEF (Compact Log Event Format) data. The message property of each log is generated from its message template and the related arguments, and all properties of each log are reordered as follows:

- Timestamp (@t, optional)

- Event (@i, optional)

- Level (@l, optional)

- Exception (@x, optional)

- Message (@m)

@r property is currently not supported by ULogViewer.

For example, if the raw logs are:

{ "@t": "2024/1/1 10:08", "@m": "log message 1", "@l": 0 }

{ "@t": "2024/1/1 10:09", "@mt": "state: {state}", "@l": 1, "state": "SUCCESS" }

{ "@t": "2024/1/1 10:10", "@mt": "structured state: {@state}", "@l": 2, "state": { "id": 0, "description": "None" } }

Then they will be formatted into:

{

"@t": "2024/1/1 10:08",

"@l": 0,

"@m": "log message 1"

}

{

"@t": "2024/1/1 10:09",

"@l": 1,

"@m": "state: SUCCESS"

}

{

"@t": "2024/1/1 10:10",

"@l": 2,

"@m": "structured state: { \"id\": 0, \"description\": \"None\" }"

}

The option is available in File, Standard Output (stdout) and HTTP/HTTPS log data sources.
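The two steps of CLEF formatting, rendering the message from its template and reordering the properties, can be sketched as follows. This is a simplified illustration, not ULogViewer's implementation: `render_message` and `format_clef_event` are hypothetical names, only plain `{name}` and `{@name}` placeholders are handled (no format specifiers, alignment or escaped braces), and structured values are rendered with `json.dumps`, whose spacing differs slightly from the output shown above.

```python
import json
import re

# Matches {name} and {@name} placeholders in a message template.
_PLACEHOLDER = re.compile(r"\{(@?)(\w+)\}")


def render_message(event):
    """Return @m if present, otherwise fill the @mt template from the event's properties."""
    if "@m" in event:
        return event["@m"]

    def substitute(match):
        name = match.group(2)
        if name not in event:
            return match.group(0)  # leave unknown placeholders untouched
        value = event[name]
        return value if isinstance(value, str) else json.dumps(value)

    return _PLACEHOLDER.sub(substitute, event.get("@mt", ""))


def format_clef_event(raw):
    """Parse one CLEF line and return its properties in the order @t, @i, @l, @x, @m."""
    event = json.loads(raw)
    ordered = {key: event[key] for key in ("@t", "@i", "@l", "@x") if key in event}
    ordered["@m"] = render_message(event)
    return ordered


if __name__ == "__main__":
    line = '{ "@t": "2024/1/1 10:09", "@mt": "state: {state}", "@l": 1, "state": "SUCCESS" }'
    print(format_clef_event(line))
```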

Parsing Information Defined in Log Profile

Log Patterns

Once you know which Raw Log Lines a Log Data Source generates, you need to define Log Patterns to parse and extract information from them.

Each Log Pattern is defined for one specific type of Raw Log Line. If each log is generated from a single Raw Log Line, you need just one Log Pattern. If each log may be generated from more than one Raw Log Line, you need to define a Log Pattern for each type of Raw Log Line in a single log.

For example:

- If each log is generated from Raw Log Line (Type 1), you just need to define a Log Pattern for Raw Log Line (Type 1).

- If each log is generated from 3 types of Raw Log Line:

  - Raw Log Line (Type 1)

  - Raw Log Line (Type 2)

  - Raw Log Line (Type 3)

  then you need to define 3 Log Patterns:

  - Log Pattern 1 for Raw Log Line (Type 1)

  - Log Pattern 2 for Raw Log Line (Type 2)

  - Log Pattern 3 for Raw Log Line (Type 3)

If no Log Pattern is defined, each Raw Log Line will be treated as the Message of a log directly. Therefore, you can inspect the Raw Log Lines in the viewer by leaving Log Patterns undefined.

There are 3 parameters in each Log Pattern:

Pattern

Describes the string pattern of Raw Log Line using a Regular Expression. Furthermore, you need to use Named Groups to extract data from Raw Log Line into specific properties of the log.

Please refer to here for a quick start on using Regular Expressions.
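To illustrate how Named Groups map parts of a Raw Log Line to log properties, here is a small Python sketch. The line format and the group names `Timestamp`, `Level` and `Message` are assumptions chosen for the example. Note that Python writes named groups as `(?P<Name>...)`; the syntax expected by ULogViewer may differ (for example `(?<Name>...)`), so treat the pattern as an illustration only.

```python
import re

# Hypothetical raw log line format: "<date> <time> <level letter> <message>"
LOG_PATTERN = re.compile(
    r"^(?P<Timestamp>\d{4}/\d{2}/\d{2} \d{2}:\d{2}:\d{2}) "
    r"(?P<Level>[A-Z]) "
    r"(?P<Message>.*)$"
)


def extract_properties(raw_log_line):
    """Return a dict of named-group captures, or None if the line does not match."""
    match = LOG_PATTERN.match(raw_log_line)
    return match.groupdict() if match else None


if __name__ == "__main__":
    print(extract_properties("2024/01/01 10:08:00 I Service started"))
```

Each named group becomes one property of the resulting log, which is why the group names have to correspond to the properties you want to fill.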

Is Repeatable

If the specific type of Raw Log Line may occur more than once in a row, you can mark the Log Pattern as repeatable.

Is Skippable

If the specific type of Raw Log Line may not occur, you can mark the Log Pattern as skippable. Usually you need to take both Is Repeatable and Is Skippable into account according to the following conditions:

| | Not repeatable | Repeatable |
|---|---|---|
| Not skippable | Raw Log Line should occur exactly 1 time | Raw Log Line should occur at least 1 time continuously |
| Skippable | Raw Log Line should occur at most 1 time | Raw Log Line may not occur or occur continuously |

Ways of Matching Log Lines with Patterns (v3.0+)

There are 3 ways of matching raw log lines:

Sequentially

The default way to match raw log lines. Starting from the first raw log line and the first pattern:

- Pattern matched

  Read the next raw log line. If the pattern is not repeatable, move to the next pattern, or move back to the first pattern and generate a log if this is the last pattern.

- Pattern not matched

  If the pattern is skippable, move to the next pattern, or move back to the first pattern and generate a log if this is the last pattern. If the pattern is non-skippable, move back to the first pattern and drop all intermediate log properties.
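The sequential matching rules can be sketched as a small state machine in Python. This is an interpretation of the description above, not ULogViewer's implementation. `LogPattern` and `parse_sequentially` are hypothetical names, and several details are assumptions: a repeatable pattern that has already matched is treated as satisfied when it stops matching, values captured more than once for the same property are joined with newlines, and a line that cannot start a log is skipped.

```python
import re
from dataclasses import dataclass


@dataclass
class LogPattern:
    regex: str
    repeatable: bool = False
    skippable: bool = False


def _merge(props, match):
    # Assumption: repeated captures of one property are joined with newlines.
    for name, value in match.groupdict().items():
        if value is not None:
            props[name] = f"{props[name]}\n{value}" if name in props else value


def parse_sequentially(lines, patterns):
    logs, props = [], {}
    index = 0        # index of the current pattern
    seen = False     # True once the current pattern has matched at least once
    start = i = 0    # start: first line of the log being built; i: current line
    while i < len(lines):
        pattern = patterns[index]
        match = re.search(pattern.regex, lines[i])
        if match:
            _merge(props, match)
            i += 1                      # read the next raw log line
            seen = True
            if pattern.repeatable:
                continue                # stay on the same pattern
        elif not (pattern.skippable or seen):
            # Required pattern failed: drop intermediate properties and restart.
            if i == start:
                i += 1                  # this line cannot start a log, skip it
            props, index, seen, start = {}, 0, False, i
            continue
        index, seen = index + 1, False  # move to the next pattern
        if index == len(patterns):      # last pattern handled: generate a log
            if i > start:
                logs.append(props)
            else:
                i += 1                  # nothing matched at all, skip the line
            props, index, start = {}, 0, i
    # End of input: keep the pending log if every remaining pattern is optional.
    remaining = patterns[index + 1:] if seen else patterns[index:]
    if props and all(p.skippable for p in remaining):
        logs.append(props)
    return logs


if __name__ == "__main__":
    patterns = [
        LogPattern(r"^<Timestamp>(?P<Timestamp>.+)</Timestamp>$"),
        LogPattern(r"^<Level>(?P<Level>\w)</Level>$", skippable=True),
        LogPattern(r"^<Message>$"),
        LogPattern(r"^(?!</Message>$)(?P<Message>.*)$", repeatable=True, skippable=True),
        LogPattern(r"^</Message>$"),
    ]
    raw = [
        "<Timestamp>2024/01/01 10:08:00</Timestamp>",
        "<Level>e</Level>",
        "<Message>",
        "Disk failure",
        "on drive C",
        "</Message>",
    ]
    print(parse_sequentially(raw, patterns))
```

The example patterns combine all four cases from the repeatable/skippable table: the timestamp line must occur exactly once, the level line at most once, and the message body lines any number of times.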

Arbitrary order

Use arbitrary patterns to match each log line. A log is generated when all non-skippable patterns have matched.

Arbitrary order after first one

Use the first pattern to match raw log lines. Once the first pattern has matched, the following raw log lines are matched in the same way as Arbitrary order.

The first pattern should be non-skippable; otherwise this mode is treated as Arbitrary order.

Log Level Map

If the Level of a log has been extracted from Raw Log Line by a Log Pattern, a table is needed to describe how to convert the extracted String into a Level. If the table doesn’t exist, the Level of the log will be Undefined.

For example, if the valid set of Levels is { Success, Failure } and you know that ‘S’ means Success and ‘F’ means Failure in Raw Log Line, then you need to provide the following Log Level Map:

| Text | Mapped Level |
|---|---|
| F | Failure |
| S | Success |
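Conceptually, a Log Level Map is a lookup table from extracted text to Level. The following minimal Python sketch shows the idea; `map_level` is a hypothetical helper, and returning Undefined for text that is missing from the table is an assumption (the text above only states this for the case where no table exists).

```python
# The Log Level Map from the table above, as a plain dictionary.
LOG_LEVEL_MAP = {"F": "Failure", "S": "Success"}


def map_level(text, level_map=LOG_LEVEL_MAP):
    """Convert extracted level text to a Level name, falling back to Undefined."""
    return level_map.get(text, "Undefined")


if __name__ == "__main__":
    for text in ("S", "F", "X"):
        print(text, "->", map_level(text))
```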

Log String Encoding

Depending on the format of Raw Log Line, the data inside it may be encoded in a specific way to avoid conflicting with that format. For example, Raw Log Lines generated by the SQLite Log Data Source are in an XML-like format, so the values inside them are encoded as XML/HTML strings.

Timestamp type

There are 4 types of timestamp supported for parsing timestamps from Raw Log Line:

- Unix Timestamp in seconds. Seconds with decimal places are supported.

- Unix Timestamp in milliseconds. Milliseconds with decimal place is supported.

- Unix Timestamp in microseconds. Microseconds with decimal place is supported.

- Custom.
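The three Unix Timestamp types differ only in the unit of the number. A minimal Python sketch, assuming the value counts from 1970-01-01 00:00:00 UTC and returning the result in UTC (how ULogViewer handles time zones is not covered here); `parse_unix_timestamp` is a hypothetical name:

```python
from datetime import datetime, timedelta, timezone

_EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
_UNITS = ("seconds", "milliseconds", "microseconds")


def parse_unix_timestamp(text, unit="seconds"):
    """Parse a Unix timestamp given in the named unit; decimal places are allowed."""
    if unit not in _UNITS:
        raise ValueError(f"unsupported unit: {unit}")
    return _EPOCH + timedelta(**{unit: float(text)})


if __name__ == "__main__":
    print(parse_unix_timestamp("1704103680.5", "seconds"))
    print(parse_unix_timestamp("1704103680500", "milliseconds"))
```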

Timestamp format

The format of the timestamp extracted from Raw Log Line varies between different types of logs. If you find that ULogViewer cannot parse the timestamp correctly by default, you can set Timestamp type to Custom and provide the exact format.

Please refer to here for the complete reference of date time formats.
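As an illustration of what parsing with an exact format means, the following Python sketch parses a timestamp written as yyyy/MM/dd HH:mm:ss.ffffff. Python's `strptime` uses its own directives, so `%Y/%m/%d %H:%M:%S.%f` is only an assumed equivalent of that format; the format string you enter in ULogViewer follows the reference mentioned above, not Python's syntax.

```python
from datetime import datetime

# Assumed Python equivalent of the format yyyy/MM/dd HH:mm:ss.ffffff
PYTHON_FORMAT = "%Y/%m/%d %H:%M:%S.%f"


def parse_custom_timestamp(text, fmt=PYTHON_FORMAT):
    """Parse a timestamp string with an exact format; raises ValueError on mismatch."""
    return datetime.strptime(text, fmt)


if __name__ == "__main__":
    print(parse_custom_timestamp("2021/01/01 10:08:00.000000"))
```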

Time span type

Sometimes the time in Raw Log Line is represented as “Relative time” instead of an exact timestamp. In this case you can extract and parse the time from Raw Log Line as a Time span. There are 7 types of time span supported:

- Total days. For example, 1.5 days means 1 day and 12 hours.

- Total hours. For example, 20.5 hours means 20 hours and 30 minutes.

- Total minutes.

- Total seconds.

- Total milliseconds.

- Total microseconds.

- Custom.
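The non-custom time span types all interpret a single number as a total amount of one unit. A minimal Python sketch using `timedelta`; `parse_time_span` and the dictionary keys are hypothetical names that mirror the list above:

```python
from datetime import timedelta

# Maps each time span type from the list above to a timedelta keyword.
_UNIT_KEYWORDS = {
    "Total days": "days",
    "Total hours": "hours",
    "Total minutes": "minutes",
    "Total seconds": "seconds",
    "Total milliseconds": "milliseconds",
    "Total microseconds": "microseconds",
}


def parse_time_span(text, span_type):
    """Interpret text as a total amount of the given unit; decimals are allowed."""
    return timedelta(**{_UNIT_KEYWORDS[span_type]: float(text)})


if __name__ == "__main__":
    print(parse_time_span("1.5", "Total days"))
    print(parse_time_span("20.5", "Total hours"))
```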

Time span format

Just like Timestamp format, you can set Time span type to Custom and provide the exact format to parse the time span correctly.

Please refer to here for the complete reference of time span formats.